Replacing CouchDB views with ElasticSearch 20 May 2012

I edited this post to provide more context, so that the references to the project internals actually make sense to those who didn’t work on it.

This internal post eventually led to a public blog post, but this is the journal of my concrete experiences with ElasticSearch.

This devlog follows directly on from my post about performance improvements.

The gist of the previous post was that I was having trouble fixing some bugs that occurred during a bulk import, because the process ran at a glacial pace, and that pace was directly attributable to the need to generate incredibly large CouchDB views.

What started as a straightforward task forced me to try some very interesting approaches, eventually turning into a completely experimental branch of the project that I have been fiddling with in my free time over the last weekend.

We have ElasticSearch available, let’s use it.

Since I last wrote about ElasticSearch, we have implemented it for the search functionality on the background and analysis pages. Knowing the kind of indexes we were building this incredibly slow view for in CouchDB, I thought it might be faster, if not more straightforward, to simply index the data with ElasticSearch as well, allowing us to make use of its extensive query capabilities.

One concession I did make in my experiment, however, was to save the ‘materialized’ latest values and their respective years inside the object in CouchDB. This not only made the queries and indexing simpler, but also made a whole bunch of the code around comparisons and displays cleaner. It would probably have simplified things for the maps too, so I am of the opinion that we should have done this a long time ago regardless.
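To make the idea of 'materialized' latest values concrete, here is a minimal sketch in Python. The document shape, the field names (`indicators`, `latest`) and the helper `materialize_latest` are all assumptions made for illustration; the post does not show the project's actual schema.

```python
def materialize_latest(indicators):
    """Return {indicator: {"year": y, "value": v}} for the most recent recorded year.

    `indicators` is assumed to map an indicator name to a sparse
    {year: value} mapping (hypothetical shape, not the project's schema).
    """
    latest = {}
    for name, by_year in indicators.items():
        if not by_year:
            continue  # sparse data: skip indicators with no recorded years
        year = max(by_year)
        latest[name] = {"year": year, "value": by_year[year]}
    return latest


# Hypothetical district document; only the structure matters here.
district = {
    "_id": "district-001",
    "indicators": {
        "enrollment": {2008: 1200, 2010: 1350},
        "graduation_rate": {2009: 0.81},
        "teacher_count": {},
    },
}

# Store the derived values on the document itself before saving it.
district["latest"] = materialize_latest(district["indicators"])
```

With the latest value and year stored on the document, queries and display code can read `latest` directly rather than scanning every year of every indicator.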

About the data

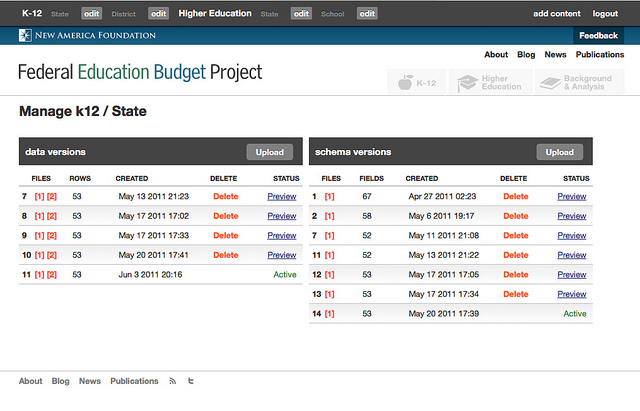

The data for each of the school districts is split into around 65 indicators, which are then split (sparsely) into up to 10 values, one for each recorded year. The most complex view we have is used to compare the school districts to one another, based on these indicators. We end up with 57059 entries in the view for every dataset that is uploaded, and there are multiple datasets in the system at any one time.
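As a rough illustration of why the view grows so quickly, the sketch below flattens one district's sparse indicator data into view-style rows, one row per recorded value. The key layout `(indicator, year, district_id)` and the function name `view_rows` are assumptions; the post does not show the real view definition.

```python
def view_rows(district_id, indicators):
    """Yield ((indicator, year, district_id), value) pairs, one per recorded value.

    The key layout is an assumed one, chosen so that rows for the same
    indicator and year sort next to each other for comparison.
    """
    for name, by_year in sorted(indicators.items()):
        for year, value in sorted(by_year.items()):
            yield (name, year, district_id), value


# Hypothetical sample data: two indicators, three recorded values in total.
rows = list(
    view_rows(
        "district-001",
        {
            "enrollment": {2008: 1200, 2010: 1350},
            "graduation_rate": {2009: 0.81},
        },
    )
)
```

Every recorded value becomes its own row, so the total is the sum of recorded years across all indicators and all districts in a dataset.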

Having this amount of data in CouchDB and the views is not a problem, but being in a situation where there are user-initiated batch imports into the system paints a very different performance picture than the traditional user-contributed content workflow. What was killing us was having to import > 16k records all at once, not simply having > 16k records in the database.
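For a batch import of this size, ElasticSearch's bulk API accepts many documents in a single request as newline-delimited JSON: an action line followed by the document source, repeated for each record. The sketch below only builds that request body. The index name `districts` and the helper `bulk_index_body` are hypothetical, and older ElasticSearch versions also expect a `_type` in the action line, so treat this as an outline rather than the project's import code.

```python
import json


def bulk_index_body(docs, index="districts"):
    """Build a newline-delimited JSON body for the ElasticSearch bulk API.

    Each document contributes two lines: an "index" action with its
    metadata, then the document source. The index name is an assumption.
    """
    lines = []
    for doc in docs:
        # Action line: which index and id this document should be stored under.
        lines.append(json.dumps({"index": {"_index": index, "_id": doc["_id"]}}))
        # Source line: the document itself, without the CouchDB-style _id field.
        source = {key: value for key, value in doc.items() if key != "_id"}
        lines.append(json.dumps(source))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


body = bulk_index_body(
    [
        {"_id": "district-001", "name": "Example A"},
        {"_id": "district-002", "name": "Example B"},
    ]
)
```

The resulting string would be sent in one POST to the `_bulk` endpoint, so a large import can go out in a few big requests instead of one request per record.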
